NVIDIA H100 GPU

The NVIDIA H100 Tensor Core GPU delivers an order-of-magnitude leap for large-scale AI and HPC, with performance, scalability, and security for every data center. The NVIDIA NVLink Switch System provides direct communication between up to 256 GPUs, and a dedicated Transformer Engine accelerates trillion-parameter language models, so H100 can take on exascale workloads. For smaller jobs, H100 can be partitioned into right-sized Multi-Instance GPU (MIG) instances. With Hopper Confidential Computing, this scalable compute power can secure sensitive applications on shared data center infrastructure. The NVIDIA AI Enterprise software suite (included with H100 PCIe, an add-on for H100 SXM) shortens development time and simplifies the deployment of AI workloads, making H100 a powerful end-to-end AI and HPC data center platform.

NVIDIA Data Centre GPUs

Compare Latest Top 4 GPUs

| | NVIDIA H200 Tensor Core GPU | NVIDIA H100 CNX | NVIDIA H100 | NVIDIA L40S |
|---|---|---|---|---|
| Form Factor | H200 SXM¹ | Dual-slot full-height, full length (FHFL) | H100 SXM | 4.4" (H) x 10.5" (L), dual slot |
| GPU Memory | 141GB | 80GB HBM2e | 80GB | 48GB GDDR6 with ECC |
| Interconnect | NVIDIA NVLink®: 900GB/s; PCIe Gen5: 128GB/s | PCIe Gen5: 128GB/s | NVLink: 900GB/s; PCIe Gen5: 128GB/s | PCIe Gen4 x16: 64GB/s bidirectional |
| Memory Bandwidth | 4.8TB/s | > 2.0TB/s | 3.35TB/s | 864GB/s |
| MIG instances | Up to 7 MIGs @ 16.5GB each | 7 instances @ 10GB each, 3 instances @ 20GB each, 2 instances @ 40GB each | Up to 7 MIGs @ 10GB each | NA |
| FP16 Tensor Core | 1,979 TFLOPS² | NA | 1,979 teraFLOPS* | 366 / 733² TFLOPS |

- The World's Most Advanced Chip

- Transformer Engine: Supercharging AI, Delivering Up to 30X Higher Performance

- NVLink Switch System

- Second-generation Multi-Instance GPU (MIG): 7X More Secure Tenants

- NVIDIA Confidential Computing

- New DPX Instructions: Solving Exponential Problems with Accelerated Dynamic-Programming

Deep Learning Training

Performance and Scalability

The era of exascale AI has arrived, with trillion-parameter models now required to take on next-generation performance challenges such as conversational AI and deep recommender systems.

Confidential Computing

Secure Data and AI Models in Use

New Confidential Computing capabilities secure the GPU end to end without sacrificing performance, making it ideal for ISV solution protection and Federated Learning applications.

Deep Learning Inference

Performance and Scalability

AI today solves a wide array of business challenges using an equally wide array of neural networks. So a great AI inference accelerator has to deliver the highest performance and the versatility to accelerate these networks, in any location from data center to edge that customers choose to deploy them.

High-Performance Computing

Faster Double-Precision Tensor Cores

The importance of HPC has rarely been clearer than since the onset of the global pandemic. Using supercomputers, scientists have run molecular simulations of how COVID infects human respiratory cells and developed vaccines at unprecedented speed.

Data Analytics

Faster Double-Precision Tensor Cores

Data analytics often consumes the majority of the time in AI application development. Scale-out solutions built on commodity CPU-only servers get bogged down by a lack of scalable computing performance, because large datasets are scattered across multiple servers.

Optimizing Compute Utilization

Mainstream to Multi-node Jobs

IT managers seek to maximize utilization (both peak and average) of their compute resources. They often employ the dynamic reconfiguration of compute to right-size resources for the workload in use.

Choose the right Data Center GPU

| Solution Category | DL Training & Data Analytics | DL Inference | HPC/AI | Omniverse / RenderFarms | Virtual Workstation | Virtual Desktop (VDI) | Mainstream Acceleration | Far Edge Acceleration | AI-on-5G |
|---|---|---|---|---|---|---|---|---|---|
| GPU Solution for Compute | | | | | | | | | |
| H100* | PCIe, SXM, CNX | PCIe, SXM, CNX | PCIe, SXM, CNX | | | | PCIe, CNX | | CNX |
| A100 | PCIe, SXM, A100X | PCIe, SXM | PCIe, SXM, A100X | | | | PCIe, A100X | | A100X |
| A30 | | PCIe | PCIe | | | | PCIe | | A30X |
| GPU Solution for Graphics/Compute | | | | | | | | | |
| L40 | | | | | | | | | |
| A40 | | | | | | | | | |
| A10 | | | | | | | | | |
| A16 | | | | | | | | | |
| GPU Solution for Small Form Factor Compute/Graphics | | | | | | | | | |
| A2 | | | | | | | | | |
| T4 | | | | | | | | | |

NVIDIA A800 40GB Active Graphics Card

SKU: VCNA800-PB

Specifications

| NVIDIA A800 40GB Active Graphics Card | |

|---|---|

| SKU | VCNA800-PB |

| GPU Memory | 40GB HBM2 |

| Memory Interface | 5,120-bit |

| Memory Bandwidth | 1,555.2 GB/s |

| CUDA Cores | 6,912 |

| Tensor Cores | 432 |

| Double-Precision Performance | 9.7 TFLOPS |

| Single-Precision Performance | 19.5 TFLOPS |

| Peak Tensor Performance | 623.8 TFLOPS |

| Multi-Instance GPU | Up to 7 MIG instances @ 5GB |

| NVIDIA NVLink | Yes |

| NVLink Bandwidth | 400GB/s |

| Graphics Bus | PCIe 4.0 x 16 |

| Max Power Consumption | 240W |

| Thermal | Active |

| Form Factor | 4.4” H x 10.5” L, dual slot |

| Display Capability* | – |

*The A800 40GB Active does not come equipped with display ports. Either the NVIDIA RTX 4000 Ada Generation, NVIDIA RTX A4000, or the NVIDIA T1000 GPU is required to support display out capabilities.
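The memory interface width and memory bandwidth in the A800 table are linked by simple arithmetic, which can be a handy sanity check when reading spec sheets. The sketch below derives the effective per-pin data rate from the two table values; the function name `per_pin_rate_gbps` is illustrative and not part of any NVIDIA tool.

```python
def per_pin_rate_gbps(bandwidth_gb_s: float, bus_width_bits: int) -> float:
    """Return the effective data rate per memory pin in Gbit/s.

    bandwidth (GB/s) * 8 gives Gbit/s across the whole bus;
    dividing by the bus width gives the rate carried by each pin.
    """
    return bandwidth_gb_s * 8 / bus_width_bits


# Values copied from the A800 40GB Active table: 1,555.2 GB/s over a 5,120-bit interface.
a800_rate = per_pin_rate_gbps(1555.2, 5120)
print(f"{a800_rate:.2f} Gbit/s per pin")
```

Run in reverse, the same relationship shows that a wider interface or a faster per-pin rate raises total bandwidth proportionally.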

NVIDIA H200 Tensor Core GPU

Specifications:

| Form Factor | H200 SXM¹ |

|---|---|

| FP64 | 34 TFLOPS |

| FP64 Tensor Core | 67 TFLOPS |

| FP32 | 67 TFLOPS |

| TF32 Tensor Core | 989 TFLOPS² |

| BFLOAT16 Tensor Core | 1,979 TFLOPS² |

| FP16 Tensor Core | 1,979 TFLOPS² |

| FP8 Tensor Core | 3,958 TFLOPS² |

| INT8 Tensor Core | 3,958 TFLOPS² |

| GPU Memory | 141GB |

| GPU Memory Bandwidth | 4.8TB/s |

| Decoders | 7 NVDEC 7 JPEG |

| Max Thermal Design Power (TDP) | Up to 700W (configurable) |

| Multi-Instance GPUs | Up to 7 MIGs @16.5GB each |

| Form Factor | SXM |

| Interconnect | NVIDIA NVLink®: 900GB/s PCIe Gen5: 128GB/s |

| Server Options | NVIDIA HGX™ H200 partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs |

| NVIDIA AI Enterprise | Add-on |

¹ Preliminary specifications. May be subject to change.

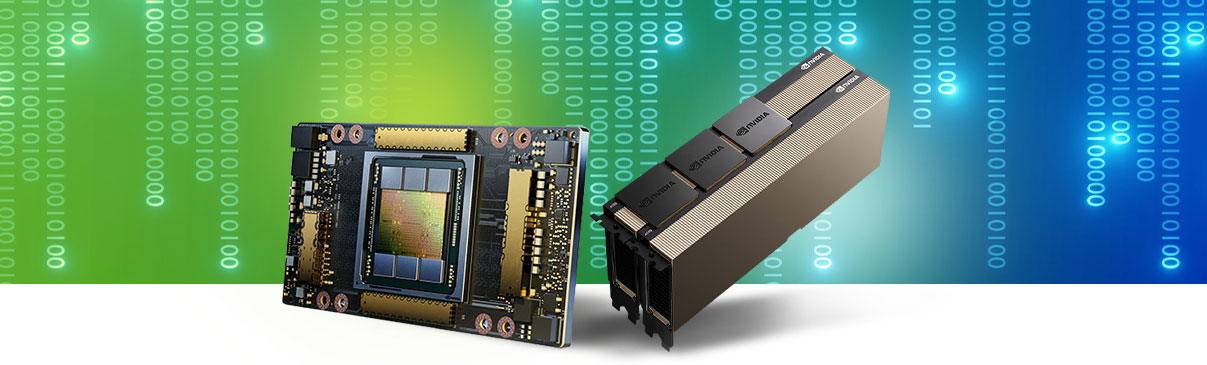

NVIDIA H100 CNX

Specifications:

| H100 CNX | |

|---|---|

| GPU Memory | 80GB HBM2e |

| Memory Bandwidth | > 2.0TB/s |

| MIG instances | 7 instances @ 10GB each 3 instances @ 20GB each 2 instances @ 40GB each |

| Interconnect | PCIe Gen5 128GB/s |

| NVLINK Bridge | Two-way |

| Networking | 1x 400Gb/s, 2x 200Gb/s ports, Ethernet or InfiniBand |

| Form Factor | Dual-slot full-height, full length (FHFL) |

| Max Power | 350W |
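The MIG row above lists three ways to split the 80GB of the H100 CNX. As a rough illustration, the sketch below adds up the memory each layout assigns to instances. It only does the arithmetic on the table values: the names are hypothetical, and it does not model compute slices or how MIG profiles are actually created on a GPU.

```python
TOTAL_MEMORY_GB = 80  # GPU memory from the H100 CNX table

# (instance count, memory per instance in GB), copied from the MIG row
mig_layouts = {
    "7 x 10GB": (7, 10),
    "3 x 20GB": (3, 20),
    "2 x 40GB": (2, 40),
}


def assigned_memory_gb(count: int, size_gb: int) -> int:
    """Total memory handed to MIG instances in one layout."""
    return count * size_gb


mig_summary = {name: assigned_memory_gb(*shape) for name, shape in mig_layouts.items()}

for name, assigned in mig_summary.items():
    print(f"{name}: {assigned}GB assigned of {TOTAL_MEMORY_GB}GB")
```

Every listed layout fits within the 80GB total, with the finer-grained splits assigning somewhat less than the full capacity.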

NVIDIA H100

SKU: NVH100TCGPU-KIT

Specifications

| Form Factor | H100 SXM | H100 PCIe |

|---|---|---|

| SKU | NVH100TCGPU-KIT | |

| FP64 | 34 teraFLOPS | 26 teraFLOPS |

| FP64 Tensor Core | 67 teraFLOPS | 51 teraFLOPS |

| FP32 | 67 teraFLOPS | 51 teraFLOPS |

| TF32 Tensor Core | 989 teraFLOPS* | 756 teraFLOPS* |

| BFLOAT16 Tensor Core | 1,979 teraFLOPS* | 1,513 teraFLOPS* |

| FP16 Tensor Core | 1,979 teraFLOPS* | 1,513 teraFLOPS* |

| FP8 Tensor Core | 3,958 teraFLOPS* | 3,026 teraFLOPS* |

| INT8 Tensor Core | 3,958 TOPS* | 3,026 TOPS* |

| GPU memory | 80GB | 80GB |

| GPU memory bandwidth | 3.35TB/s | 2TB/s |

| Decoders | 7 NVDEC, 7 JPEG | 7 NVDEC, 7 JPEG |

| Max thermal design power (TDP) | Up to 700W (configurable) | 300-350W (configurable) |

| Multi-Instance GPUs | Up to 7 MIGs @ 10GB each | Up to 7 MIGs @ 10GB each |

| Form factor | SXM | PCIe Dual-slot air-cooled |

| Interconnect | NVLink: 900GB/s; PCIe Gen5: 128GB/s | NVLink: 600GB/s; PCIe Gen5: 128GB/s |

| Server options | NVIDIA HGX™ H100 Partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs NVIDIA DGX™ H100 with 8 GPUs | Partner and NVIDIA-Certified Systems with 1–8 GPUs |

| NVIDIA AI Enterprise | Add-on | Included |
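The PCIe figures quoted on this page (128GB/s for Gen5, 64GB/s for Gen4) can be reproduced from the per-lane transfer rate. The sketch below is a back-of-the-envelope calculation, assuming a x16 link and counting both directions; the helper name is hypothetical. It also shows the slightly lower figure left after 128b/130b line encoding, which spec sheets usually round away.

```python
# Per-lane transfer rates in GT/s for PCIe 4.0 and 5.0.
GT_PER_S = {"Gen4": 16.0, "Gen5": 32.0}


def pcie_bandwidth_gb_s(generation: str, lanes: int = 16, encoding: float = 128 / 130):
    """Return (per-direction, bidirectional) bandwidth in GB/s.

    encoding is the fraction of transferred bits that carry payload;
    pass 1.0 to get the raw, rounded figure used in spec tables.
    """
    per_direction = GT_PER_S[generation] * lanes * encoding / 8  # bits -> bytes
    return per_direction, 2 * per_direction


for gen in ("Gen4", "Gen5"):
    raw = pcie_bandwidth_gb_s(gen, encoding=1.0)[1]
    usable = pcie_bandwidth_gb_s(gen)[1]
    print(f"{gen} x16: {raw:.0f} GB/s raw, about {usable:.0f} GB/s after encoding")
```

Either way, the NVLink figures in the table (600GB/s to 900GB/s) are several times higher than what a single PCIe x16 link provides.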

NVIDIA A100

SKU: NVA100TCGPU-KIT

Specifications

| | A100 80GB PCIe | A100 80GB SXM |
|---|---|---|
| SKU | NVA100TCGPU-KIT | |
| FP64 | 9.7 TFLOPS | 9.7 TFLOPS |
| FP64 Tensor Core | 19.5 TFLOPS | 19.5 TFLOPS |
| FP32 | 19.5 TFLOPS | 19.5 TFLOPS |
| Tensor Float 32 (TF32) | 156 TFLOPS / 312 TFLOPS* | 156 TFLOPS / 312 TFLOPS* |
| BFLOAT16 Tensor Core | 312 TFLOPS / 624 TFLOPS* | 312 TFLOPS / 624 TFLOPS* |
| FP16 Tensor Core | 312 TFLOPS / 624 TFLOPS* | 312 TFLOPS / 624 TFLOPS* |
| INT8 Tensor Core | 624 TOPS / 1,248 TOPS* | 624 TOPS / 1,248 TOPS* |
| GPU Memory | 80GB HBM2e | 80GB HBM2e |
| GPU Memory Bandwidth | 1,935 GB/s | 2,039 GB/s |
| Max Thermal Design Power (TDP) | 300W | 400W*** |
| Multi-Instance GPU | Up to 7 MIGs @ 10GB | Up to 7 MIGs @ 10GB |
| Form Factor | PCIe, dual-slot air-cooled or single-slot liquid-cooled | SXM |
| Interconnect | NVIDIA® NVLink® Bridge for 2 GPUs: 600 GB/s**; PCIe Gen4: 64 GB/s | NVLink: 600 GB/s; PCIe Gen4: 64 GB/s |
| Server Options | Partner and NVIDIA-Certified Systems™ with 1-8 GPUs | NVIDIA HGX™ A100 Partner and NVIDIA-Certified Systems with 4, 8, or 16 GPUs; NVIDIA DGX™ A100 with 8 GPUs |

NVIDIA A2

SKU: NVA2TCGPU-KIT

Specifications:

| NVIDIA A2 | | |

|---|---|---|

| SKU | NVA2TCGPU-KIT | |

| Peak FP32 | 4.5 TF | |

| TF32 Tensor Core | 9 TF | 18 TF¹ | |

| BFLOAT16 Tensor Core | 18 TF | 36 TF¹ | |

| Peak FP16 Tensor Core | 18 TF | 36 TF¹ | |

| Peak INT8 Tensor Core | 36 TOPS | 72 TOPS¹ | |

| Peak INT4 Tensor Core | 72 TOPS | 144 TOPS¹ | |

| RT Cores | 10 | |

| Media engines | 1 video encoder, 2 video decoders (includes AV1 decode) | |

| GPU memory | 16GB GDDR6 | |

| GPU memory bandwidth | 200GB/s | |

| Interconnect | PCIe Gen4 x8 | |

| Form factor | 1-slot, low-profile PCIe | |

| Max thermal design power (TDP) | 40–60W (configurable) | |

| Virtual GPU (vGPU) software support² | NVIDIA Virtual PC (vPC), NVIDIA Virtual Applications (vApps), NVIDIA RTX Virtual Workstation (vWS), NVIDIA AI Enterprise, NVIDIA Virtual Compute Server (vCS) | |

NVIDIA A10

SKU: NVA10TCGPU-KIT

Specifications:

| NVIDIA A10 | | |

|---|---|---|

| SKU | NVA10TCGPU-KIT |

| FP32 | 31.2 teraFLOPS |

| TF32 Tensor Core | 62.5 teraFLOPS | 125 teraFLOPS* |

| BFLOAT16 Tensor Core | 125 teraFLOPS | 250 teraFLOPS* |

| FP16 Tensor Core | 125 teraFLOPS | 250 teraFLOPS* |

| INT8 Tensor Core | 250 TOPS | 500 TOPS* |

| INT4 Tensor Core | 500 TOPS | 1,000 TOPS* |

| RT Core | 72 RT Cores |

| Encode/decode | 1 encoder 2 decoder (+AV1 decode) |

| GPU memory | 24GB GDDR6 |

| GPU memory bandwidth | 600GB/s |

| Interconnect | PCIe Gen4 64GB/s |

| Form factors | Single-slot, full-height, full-length (FHFL) |

| Max thermal design power (TDP) | 150W |

| vGPU software support | NVIDIA Virtual PC, NVIDIA Virtual Applications, NVIDIA RTX Virtual Workstation, NVIDIA Virtual Compute Server, NVIDIA AI Enterprise |
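Each Tensor Core row in the A10 table carries two figures, the second marked with an asterisk. The footnote is not reproduced on this page; the sketch below assumes the starred value is the rate with sparsity enabled, in line with the "Sparsity" labels used in the A100 Liquid Cooled table further down. It simply checks how the two columns relate, using values copied from the table.

```python
# (unstarred, starred) figures from the A10 table; TFLOPS for floating point, TOPS for integer.
a10_tensor_figures = {
    "TF32": (62.5, 125),
    "BFLOAT16": (125, 250),
    "FP16": (125, 250),
    "INT8": (250, 500),
    "INT4": (500, 1000),
}

# Ratio of the starred figure to the unstarred one for every precision.
a10_ratios = {
    name: starred / dense for name, (dense, starred) in a10_tensor_figures.items()
}

for name, ratio in a10_ratios.items():
    print(f"{name}: starred / unstarred = {ratio:.1f}x")
```

The starred figure is twice the unstarred one in every row, so comparisons between GPUs should use the same column on both sides.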

NVIDIA A16

SKU: NVA16TCGPU-KIT

Specifications:

| NVIDIA A16 | |

|---|---|

| SKU | NVA16TCGPU-KIT |

| GPU Memory | 4x 16GB GDDR6 with error-correcting code (ECC) |

| GPU Memory Bandwidth | 4x 200 GB/s |

| Max power consumption | 250W |

| Interconnect | PCI Express Gen 4 x16 |

| Form factor | Full height, full length (FHFL) dual slot |

| Thermal | Passive |

| vGPU Software Support | NVIDIA Virtual PC (vPC), NVIDIA Virtual Applications (vApps), NVIDIA RTX Virtual Workstation (vWS), NVIDIA Virtual Compute Server (vCS), and NVIDIA AI Enterprise |

| vGPU Profiles Supported | See the Virtual GPU Licensing Guide and See the NVIDIA AI Enterprise Licensing Guide |

| NVENC / NVDEC | 4x / 8x (includes AV1 decode) |

| Secure and measured boot with hardware root of trust | Yes (optional) |

| NEBS Ready | Level 3 |

| Power Connector | 8-pin CPU |

NVIDIA A30 Tensor Core

SKU: NVA30TCGPU-KIT

Specifications:

| NVIDIA A30 Tensor Core | | |

|---|---|---|

| SKU | NVA30TCGPU-KIT | |

| FP64 | 5.2 teraFLOPS | |

| FP64 Tensor Core | 10.3 teraFLOPS | |

| FP32 | 10.3 teraFLOPS | |

| TF32 Tensor Core | 82 teraFLOPS | 165 teraFLOPS* | |

| BFLOAT16 Tensor Core | 165 teraFLOPS | 330 teraFLOPS* | |

| FP16 Tensor Core | 165 teraFLOPS | 330 teraFLOPS* | |

| INT8 Tensor Core | 330 TOPS | 661 TOPS* | |

| INT4 Tensor Core | 661 TOPS | 1321 TOPS* | |

| Media engines | 1 optical flow accelerator (OFA), 1 JPEG decoder (NVJPEG), 4 video decoders (NVDEC) | |

| GPU memory | 24GB HBM2 | |

| GPU memory bandwidth | 933GB/s | |

| Interconnect | PCIe Gen4: 64GB/s; Third-gen NVLink: 200GB/s** | |

| Form factor | Dual-slot, full-height, full-length (FHFL) | |

| Max thermal design power (TDP) | 165W | |

| Multi-Instance GPU (MIG) | 4 GPU instances @ 6GB each, 2 GPU instances @ 12GB each, 1 GPU instance @ 24GB | |

| Virtual GPU (vGPU) software support | NVIDIA AI Enterprise, NVIDIA Virtual Compute Server | |

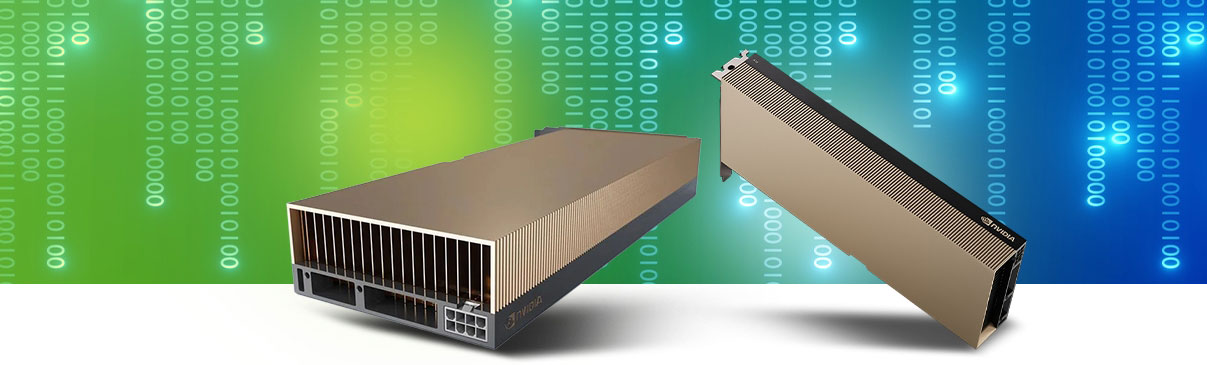

NVIDIA A40

SKU: NVA40TCGPU-KIT

Specifications:

| NVIDIA A40 | |

|---|---|

| SKU | NVA40TCGPU-KIT |

| GPU Memory | 48 GB GDDR6 with error-correcting code (ECC) |

| GPU Memory Bandwidth | 696 GB/s |

| Interconnect | NVIDIA NVLink: 112.5 GB/s (bidirectional); PCIe Gen4: 64GB/s |

| NVLink | 2-way low profile (2-slot) |

| Display Ports | 3x DisplayPort 1.4* |

| Max Power Consumption | 300 W |

| Form Factor | 4.4" (H) x 10.5" (L) Dual Slot |

| Thermal | Passive |

| vGPU Software Support | NVIDIA Virtual PC, NVIDIA Virtual Applications, NVIDIA RTX Virtual Workstation, NVIDIA Virtual Compute Server, NVIDIA AI Enterprise |

| vGPU Profiles Supported | See the Virtual GPU Licensing Guide |

| NVENC / NVDEC | 1x / 2x (includes AV1 decode) |

| Secure and Measured Boot with Hardware Root of Trust | Yes (optional) |

| NEBS Ready | Level 3 |

| Power Connector | 8-pin CPU |

NVIDIA L40

SKU: NVL40TCGPU-KIT

Specifications:

| NVIDIA L40 | |

|---|---|

| SKU | NVL40TCGPU-KIT |

| GPU Architecture | NVIDIA Ada Lovelace Architecture |

| GPU Memory | 48 GB GDDR6 with ECC |

| Display Connectors | 4 x DP 1.4a |

| Max Power Consumption | 300W |

| Form Factor | 4.4" (H) x 10.5" (L) Dual Slot |

| Thermal | Passive |

| vGPU Software Support* | NVIDIA vPC/vApps, NVIDIA RTX Virtual Workstation (vWS) |

| NVENC / NVDEC | 3x / 3x (includes AV1 encode and decode) |

| Secure Boot with Root of Trust | Yes |

| NEBS Ready | Yes / Level 3 |

| Power Connector | 1x PCIe CEM5 16-pin |

NVIDIA A100X

SKU: NVA100XTCGPUCA-KIT

Specifications:

| NVIDIA A100X | | |

|---|---|---|

| SKU | NVA100XTCGPUCA-KIT |

| Product | NVIDIA A100X Converged Accelerator |

| Architecture | Ampere |

| Process Size | 7nm | TSMC |

| Transistors | 54.2 Billion |

| Die Size | 826 mm² |

| Peak FP64 | 9.9 TFLOPS |

| Peak FP64 Tensor Core | 19.1 TFLOPS | Sparsity |

| Peak FP32 | 19.9 TFLOPS |

| TF32 Tensor Core | 159 TFLOPS | Sparsity |

| Peak FP16 Tensor Core | 318.5 TFLOPS | Sparsity |

| Peak INT8 Tensor Core | 637 TOPS | Sparsity |

| Multi-Instance GPU Support | 7 MIGs at 10 GB Each 3 MIGS at 20 GB Each 2 MIGs at 40 GB Each 1 MIG at 80 GB |

| GPU Memory | 80 GB HBM2e |

| Memory Bandwidth | 2039 GB/s |

| Interconnect | PCIe Gen4 (x16 Physical, x8 Electrical) | NVLink Bridge |

| Networking | 2x 100 Gbps ports, Ethernet or InfiniBand |

| Maximum Power Consumption | 300 W |

| NVLink | Third-Generation | 600 GB/s Bidirectional |

| Media Engines | 1 Optical Flow Accelerator (OFA) 1 JPEG Decoder (NVJPEG) 4 Video Decoders (NVDEC) |

| Integrated DPU | NVIDIA BlueField-2 Implements NVIDIA ConnectX-6 DX Functionality 8 Arm A72 Cores at 2 GHz Implements PCIe Gen4 Switch |

| NVIDIA Enterprise Software | NVIDIA vCS (Virtual Compute Server) NVIDIA AI Enterprise |

| Form Factor | 2-Slot, Full Height, Full Length (FHFL) |

| Thermal Solution | Passive |

NVIDIA A100 Liquid Cooled

SKU: NVA100TCGPU80L-KIT

Specifications:

| NVIDIA A100 Liquid Cooled | | |

|---|---|---|

| SKU | NVA100TCGPU80L-KIT |

| Product | NVIDIA A100 Liquid Cooled PCIe |

| Architecture | Ampere |

| Process Size | 7nm | TSMC |

| Transistors | 54 Billion |

| Die Size | 826 mm² |

| CUDA Cores | 6912 |

| Streaming Multiprocessors | 108 |

| Tensor Cores | Gen 3 | 432 |

| Multi-Instance GPU (MIG) Support | Yes, up to seven instances per GPU |

| FP64 | 9.7 TFLOPS |

| Peak FP64 Tensor Core | 19.5 TFLOPS |

| Peak FP32 | 19.5 TFLOPS |

| TF32 Tensor Core | 156 TFLOPS | 312 TFLOPS Sparsity |

| Peak FP16 Tensor Core | 312 TFLOPS | 624 TFLOPS Sparsity |

| Peak INT8 Tensor Core | 624 TOPS | 1248 TOPS Sparsity |

| INT4 Tensor Core | 1248 TOPS | 2496 TOPS Sparsity |

| NVLink | 2-way | Standard or Wide Slot Spacing |

| NVLink Interconnect | 600 GB/s Bidirectional |

| GPU Memory | 80 GB HBM2e |

| Memory Interface | 5120-bit |

| Memory Bandwidth | 1555 GB/s |

| System Interface | PCIe 4.0 x16 |

| Thermal Solution | Liquid Cooled |

| vGPU Support | NVIDIA AI Enterprise |

| Power Connector | PCIe 16-pin |

| Total Board Power | 300 W |

NVIDIA A30X

SKU: NVA30XTCGPUCA-KIT

Specifications:

| NVIDIA A30X | | |

|---|---|---|

| SKU | NVA30XTCGPUCA-KIT |

| Product | NVIDIA A30X Converged Accelerator |

| Architecture | Ampere |

| Process Size | 7nm | TSMC |

| Transistors | 54.2 Billion |

| Die Size | 826 mm² |

| Peak FP64 | 5.2 TFLOPS |

| Peak FP64 Tensor Core | 10.3 TFLOPS | Sparsity |

| Peak FP32 | 10.3 TFLOPS |

| TF32 Tensor Core | 82.6 TFLOPS | Sparsity |

| Peak FP16 Tensor Core | 165 TFLOPS | Sparsity |

| Peak INT8 Tensor Core | 330 TOPS | Sparsity |

| GPU Memory | 24 GB HBM2e |

| Memory Bandwidth | 1223 GB/s |

| NVLink | Third-Generation | 200 GB/s Bidirectional |

| Multi-Instance GPU Support | 4 MIGs at 6 GB Each 2 MIGs at 12 GB Each 1 MIG at 24 GB |

| Media Engines | 1 Optical Flow Accelerator (OFA) 1 JPEG Decoder (NVJPEG) 4 Video Decoders (NVDEC) |

| Interconnect | PCIe Gen4 (x16 Physical, x8 Electrical) | NVLink Bridge |

| Networking | 2x 100 Gbps ports, Ethernet or InfiniBand |

| Integrated DPU | NVIDIA BlueField-2 Implements NVIDIA ConnectX-6 DX Functionality 8 Arm A72 Cores at 2 GHz Implements PCIe Gen4 Switch |

| NVIDIA Enterprise Software | NVIDIA vCS (Virtual Compute Server) NVIDIA AI Enterprise |

| Form Factor | 2-Slot, Full Height, Full Length (FHFL) |

| Thermal Solution | Passive |

| Maximum Power Consumption | 230 W |

NVIDIA L40S

SKU: 900-2G133-0080-000

Specifications:

| NVIDIA L40S | |

|---|---|

| SKU | 900-2G133-0080-000 |

| GPU Architecture | NVIDIA Ada Lovelace Architecture |

| GPU Memory | 48GB GDDR6 with ECC |

| GPU Memory Bandwidth | 864GB/s |

| Interconnect | PCIe Gen4 x16: 64GB/s bidirectional |

| CUDA™ Cores | 18,176 |

| RT Cores | 142 |

| Tensor Cores | 568 |

| RT Core Performance | 212 TFLOPS |

| FP32 | 91.6 TFLOPS |

| TF32 Tensor Core | 366 TFLOPS |

| BFLOAT16 Tensor Core | 366 / 733² TFLOPS |

| FP16 Tensor Core | 366 / 733² TFLOPS |

| FP8 Tensor Core | 733 / 1,466² TFLOPS |

| Peak INT8 Tensor | 733 / 1,466² TOPS |

| Peak INT4 Tensor | 733 / 1,466² TOPS |

| Form Factor | 4.4" (H) x 10.5" (L), dual slot |

| Display Ports | 4 x DisplayPort 1.4a |

| Max Power Consumption | 350W |

| Power Connector | 16-pin |

| Thermal | Passive |

| Virtual GPU (vGPU) Software Support | Yes |

| NVENC / NVDEC | 3x / 3x (includes AV1 encode and decode) |

| Secure Boot with Root of Trust | Yes |

| NEBS Ready | Level 3 |

| MIG Support | No |

Every Deep Learning Framework