Kick-start Hadoop with the right platform

Exclusively suited for Hadoop's multi-server deployments

With data storage and high-performance parallel data processing

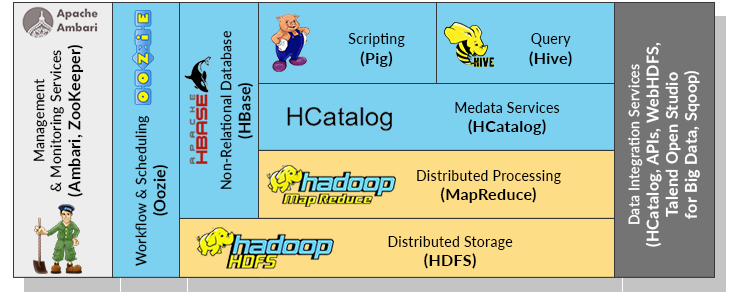

Hadoop is a solution for managing Big Data: a framework for running data management applications on a large cluster built of commodity hardware. Key components include MapReduce, a computational technique that divides applications into many small fragments of work, and the Hadoop Distributed File System (HDFS), which stores data on the compute nodes. This unique combination delivers extreme aggregate bandwidth across the cluster, high-performance parallel data processing, and automatic failover.
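To make the MapReduce idea concrete, here is a minimal sketch in plain Python of how a job can be split into small fragments of work and then recombined. It does not use the Hadoop API and runs on a single machine; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) and the sample input are illustrative assumptions, not part of Hadoop.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def map_phase(fragment: str) -> List[Tuple[str, int]]:
    """Emit a (word, 1) pair for every word in one input fragment."""
    return [(word.lower(), 1) for word in fragment.split()]


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group emitted values by key, the step between map and reduce."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(grouped)


def reduce_phase(grouped: Dict[str, List[int]]) -> Dict[str, int]:
    """Collapse each key's list of values into a single total."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(fragments: List[str]) -> Dict[str, int]:
    """Run map over every fragment, then shuffle and reduce the results.

    On a real cluster each fragment would be mapped on a different node;
    here the fragments are processed one after another (hypothetical
    single-process stand-in).
    """
    emitted: List[Tuple[str, int]] = []
    for fragment in fragments:
        emitted.extend(map_phase(fragment))
    return reduce_phase(shuffle(emitted))


if __name__ == "__main__":
    # Hypothetical input, already split into three fragments of work.
    sample = ["big data on hadoop", "hadoop stores data", "data data"]
    print(word_count(sample))
```

Because each call to `map_phase` depends only on its own fragment, the map work can in principle be spread across as many nodes as there are fragments, which is the property that lets a cluster process data in parallel.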

The Hadoop ecosystem provides an integrated data processing platform with standard interfaces and methods, which allows companies to establish a single resource for data processing.

Apache Hadoop is the most efficient and effective Big Data infrastructure available.

Hadoop Hardware Vendor: If you have decided to deploy Hadoop, Iron provides a hardware platform that is pre-tested and certified. Even though Hadoop runs on commodity hardware, it is important to work with Iron to ensure that the cluster is engineered properly for Hadoop and that you get specialized technical support and services. In particular, specific requirements must be met for memory, core count, and disk space.

Hadoop Software Vendor: Big Data is going to impact your company in the future, so use a distribution that is supported by a good Hadoop software vendor and you will have software support when you need it.

To help companies prove the Big Data concept and determine the potential of Apache Hadoop, Iron has created a turnkey reference architecture: a series of building blocks pre-integrated in a rack. The platform includes all necessary networking, compute, storage, and software, pre-configured and optimized for maximum performance and future scalability.